(I apologize that some of my links to information about historical software require society accounts; if you know of freely accessible links, please let me know.)

While still at school in the 1960s I was lucky to land a summer job with IBM, in the days when pedal-operated computers were being replaced by steam-driven systems. Well, not quite! Computers in those days had many moving parts, like tape drives, card readers and card punches, but they were driven by electricity, not steam power. However, 1960s computers used transistors instead of today's integrated circuits, and unlike the world-networked computers of the 21st century, their only link beyond the walls of their specially air-conditioned, raised-floor rooms was the electrical grid.

In the 1960s the Internet was still a gleam in the eyes of military planners trying to ensure communications continuity following a nuclear war. Input and output for almost all computers was done using card readers and printers located inside computer rooms. It wasn't until the 1970s that computers were commonly accessed using PC-like "terminals" with display screens and keyboards.

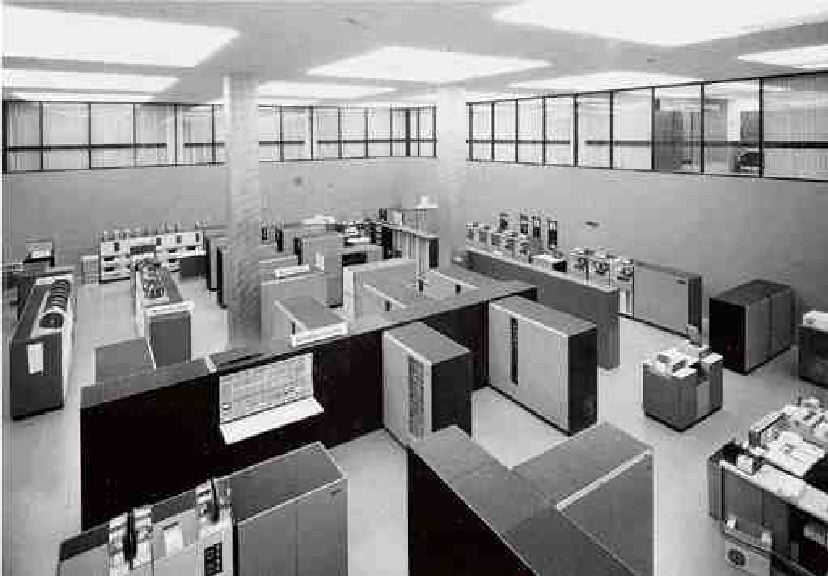

In 1968 I was attending the University of Waterloo, which had gained much fame as a centre of computing excellence, in part by installing the second most powerful computer in Canada in a temple-like room in the centre of the new math building. You looked down on that modern wonder of the world through a glass wall lining the building's second-floor arcade. That computer contained what we then considered to be an amazing "megabyte" of random access memory, aka RAM (which was called "core" memory in those days). Today even the cheapest smartphone has thousands of times that much RAM.

In 1965, University of Waterloo programmers (some say it was four undergraduates; others credit one or two professors) made a significant contribution to the usability of computers with their creation of the fast FORTRAN compiler called WATFOR, a revolutionary computer program that dramatically improved computers' responsiveness to student submissions.  Before the implementation of WATFOR:

Students would keypunch their solutions to computer programming exercises and hand in their card decks, which would eventually be taken into the computer room, fed into a card reader, and run overnight as "batch jobs". The students would return the next day to retrieve the printout that the computer run had produced. In those days students were well advised to "desk check" their keypunching for errors, because after a day's wait they might find that their work had been rejected just because of a missing comma or a misspelled name. They could usually keypunch corrections quickly, but after handing in their updated card decks they'd have to wait another day for the computer's response. WATFOR revolutionized the process:

Students lined up to hand their card decks to an operator, who fed them directly into a card reader, then continued down the line to a printer, where a second operator handed them their printed output.

Not only was the overnight wait time shrunk to the time for a short walk from card reader to printer, but the quality of output was improved, because the WATFOR compiler reacted to obvious typographical errors in student FORTRAN programs (like missing commas) by automatically making what were usually reasonable corrections.

My first paying job programming computers was in the summer of 1968, after my second year of university, when I was fortunate to be hired by IBM to write programs for some of its new clients: purchasers of IBM's then least expensive computers (for which IBM charged several thousand dollars a month). I was a novice, but so were the clients, so my lack of experience wasn't obvious to them. The computers I used that summer were about the size of a small minivan, with no hard disks or even tape drives to store information, just card readers, card punches and printers. Some of those computers had just 8K of random access memory, barely enough space to hold a few closely-typed pages of text. (8K is actually 8,192 bytes, not 8,000; at the time those extra 192 bytes could be critically important to squeezing in the solution!)

If you're computer-savvy you may know that storing a character on a computer (e.g. on your own PC or on the Internet) takes an amount of computer memory known as a "byte" (at least for the basic Latin letters, digits and punctuation used in English). Some of you might also know that a byte contains eight binary digits, called "bits" for short. I sometimes joke that memory costs were so high then that bytes contained fewer than eight bits. Yeah, I know; that's a geek joke. (It's also illogical, because today a "byte" is by convention exactly eight bits.) But it is a fact that in those days, instead of today's practice of storing each character (for example, in ASCII) in an eight-bit byte, the character code called "binary-coded decimal" (or "BCD") was sometimes used to store each character in seven-bit, or even six-bit, chunks. Using six bits instead of eight reduced memory storage requirements by 25%.

Electronic computers were already being used for commercial purposes in the 1950s. Throughout that decade Grace Murray Hopper worked to improve their usability, realizing how "a much wider audience could use the computer if there were tools that were both programmer-friendly and application-friendly". Her tireless dedication to that goal led to the eventual definition of an industry-wide standard for COBOL, one of the first "English-like" programming languages. My own earliest experience with COBOL was in 1970, in my third summer with IBM, when I worked on a team converting all of the application programs of Eaton's (at the time a major Canadian retail company) from an IBM operating system called DOS (not the same as MS-DOS, which came years later, for PCs) to another one called OS. My work on the Eaton's conversion didn't actually entail much COBOL programming.

In the early 1990s I finally did some COBOL programming, for Sun Life, using a modernized version called COBOL II, whose improvements allowed the banishment of the GOTO statement. That long-established, apparently essential programming language statement had been declared disreputable in Edsger Dijkstra's 1968 letter "Go To Statement Considered Harmful" to the Communications of the Association for Computing Machinery. The letter argued that the GOTO statement made it hard for programmers reading the text of their programs to follow what Dijkstra called the "progress of the process" that a program was modelling. His conclusion was initially debated, but the letter is now widely recognized as the clarion call that began what was soon known as the Structured Programming Revolution.

In the early 1970s, when I first read Dijkstra's letter, I experienced an epiphany, understanding his explanation of how and why the GOTO statement actually made things more confusing. At the time I was a "systems programmer" at Simpsons-Sears (Sears for short). Since my high-school programming debut in 1963, the GOTO statement had been the only way I knew to control which programming language commands the computer would carry out next. The Assembler programming language that we systems programmers used was missing the constructs that Dijkstra had promoted as the safer, more intelligible alternatives to the GOTO (e.g. IF THEN ELSE, DO WHILE, and CASE). Sears was then using that same geeky Assembler programming language for the "application" programs that were used to manage information for accounting, order processing, and other corporate functions. But soon after I joined them we decided to embrace "structured programming" and switch our application programmers from Assembler to PL/I (pronounced "pea ell one"), a programming language more modern than COBOL whose structured constructs made GOTOs unnecessary. While I was building tools to increase the productivity of our PL/I-using applications programmers, I discovered that IBM's "Concept 14" macros (super-geeks can find the actual macros here) would allow even my Assembler language programs to be purged of the unstructured "spaghetti code" that results from using GOTOs.

Macros provide a means for extending a programming language, and I used the Concept 14 macros to clean up my own GOTO-infested Assembler programs. (To be technically accurate, in Assembler the GOTO equivalent is the "branch" instruction.) Years later at Art Benjamin Associates I used a similar set of Assembler macros to help me build commercial programmer productivity tools that were part of the ACT/1 information systems prototyper [hyperlink requires an ACM account]. One of those tools was a "syntax-directed, recursive-descent compiler", a name so impressive-sounding to me that I tried (unsuccessfully) to present a paper on it at an international conference.

Some of the most important historical notes about the comparatively young field of computing: In the 1940s, code-breakers at Bletchley Park, England, used electromechanical "bombes" to help decipher the Germans' wartime "Enigma" code, and the Colossus computers to break German teleprinter messages encrypted with the Lorenz cipher. But the acknowledged "father of computing" was the 19th-century mathematician Charles Babbage. In 1821, at a time when "computers" were the people who calculated the numbers to be listed in mathematical tables, Babbage invented the Difference Engine to calculate those tables mechanically. His invention was never completed in his lifetime; it was not until 1985 that London's Science Museum began building a working difference engine from Babbage's detailed plans, completing its calculating section in 1991.

In a slightly more technical story than this one, I've written about one of my own software contributions in the early days of computing, which I called the Zero Level Interrupt Handler.

Many of my computer software constructs have been tools to enhance the productivity of computer users.

You can read about DBAid for IMS, a programmer productivity tool I built that became a commercial software product.

I am an instructional designer and computer softsmith, B Math (University of Waterloo). I have implemented application and system software on several computing platforms including IBM mainframes, MS Windows, MacOS, and UNIX. In recent years I have been teaching and developing online courses.